27 March 2023

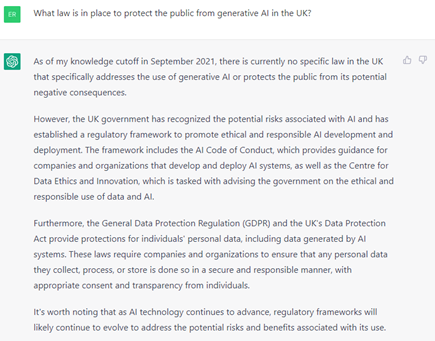

Many business leaders looking to navigate the regulatory environment will understandably struggle to know where to start. Although the field of artificial intelligence (AI) dates back to the 1950s, we are still working to understand its full potential. To compound this, AI is subject to the same regulations that currently apply to tech companies. Many of these companies are also new, and there is fierce debate between tech companies and regulators over the reach and scope of current and proposed regulation.

AI market size graph

Though AI companies are, by and large, not yet asking for bespoke legislation, they may have no choice in the future. Different jurisdictions are currently weighing different approaches to AI and risk management: the US is focusing more on security risks, while the EU is focusing more on ethical and societal implications. The UK set out its National AI Strategy - AI Action Plan in July 2022, which introduced three pillars: investing in the long-term needs of the AI ecosystem; ensuring AI benefits all sectors and regions; and governing AI effectively.

This ten-year plan may need to move faster to keep pace with a technology developing as rapidly as AI. A governance framework is currently being developed with the involvement of the Cabinet Office’s Central Digital and Data Office, the Centre for Data Ethics and Innovation, the Department for Digital, Culture, Media and Sport (DCMS), the Department of Health and Social Care (DHSC) and the Office for AI, all of which are working to provide standards that support transparency, data protection, safety, ethics and security.

However, with no comprehensive legal framework designed specifically for AI currently in place, it is important that any approach ensures AI systems are deployed safely and accountably, in a responsible and ethical way.

Online safety is key

A disclaimer alone will not be robust enough to protect users of generative AI products, nor will it absolve providers of their responsibility to abide by the law.

The Online Safety Bill aims to make the UK the ‘safest place in the world to be online’ and seeks to introduce new laws to protect users in the UK from illegal content online, including in search results, such as content relating to terrorism, child sexual exploitation or self-harm. Aiming to keep pace with emerging technology, the bill requires firms to take down illegal content and anything that breaches their own terms of service, and to provide tools that give people more choice over the content they engage with. This has implications for big social media platforms such as Facebook, YouTube and Twitter, which host user-generated content and allow UK users to communicate through messaging, comments or forums. It will also affect search engines such as Google and Bing. These platforms will be required to remove illegal material to prevent users from seeing harmful content and to protect them from online scams.

Generative AI is already in use, and the sector is only set to grow. Businesses will look to incorporate these tools into their operations by using off-the-shelf AI products, building bespoke tools, or both. Boards will need to ensure that the way they use AI complies with current regulation, and should monitor upcoming regulation to assess how it will affect their business.

Generative AI is built on machine learning: it learns from its users and from the vast amount of data it is trained on, so any product will need to identify and correct errors before they proliferate. Addressing bias will require a fine balance between regulation and ethics. Decades after AI was first developed, there are still no standardised practices for recording where training data comes from or how it was acquired, let alone for controlling any biases the resulting systems may form. With the boom in GPU computing power accelerating the pace at which this technology enters our lives, we may need much wider legislative changes in the near future to address transparency in AI.

Conclusion

Generative AI will disrupt the way people currently engage with search engines and social media as the technology becomes mainstream. The internet is currently rife with illegal content, including fraudulent ads and harmful material. While generative AI tools can be instructed to respond within the bounds of this proposed legislation, there are reports of those instructions being manipulated to produce answers that could violate it. An AI product that harvests illegal content could also be catastrophic for its maker. Ethical AI is important not only to protect end users and ensure companies abide by the law; it is also integral to the successful roll-out of any new AI product.

Business leaders will need to approach their use of generative AI in a responsible and ethical manner, giving consideration to the potential risks and impacts on their users and customers.